Azure Functions and Microsoft Cognitive Services

Microsoft is releasing new services at a very fast pace, which means you really need to keep up. A few weeks back I read about Azure Functions (explained in this blog post) and Microsoft Cognitive Services.

Cognitive Services are services that developers can use to easily add intelligent features – such as emotion and sentiment detection, vision and speech recognition, knowledge, search and language understanding – into their applications.

With these services you can easily build intelligent applications. Microsoft offers APIs in the following categories:

- Vision

- Speech

- Language

- Knowledge

- Search

Each of these categories contains a lot of services. To see the full list of APIs, go to the following URL:

Some services can retrieve information about images that are uploaded to your website; they are intelligent enough to recognize what is in the image.

This is handy in combination with, for example, Azure Functions. An Azure Function can be triggered when a blob (an image) is uploaded; the function then analyzes the image and saves a description of what is on it to a storage location of your choosing.
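The flow described above (an upload triggers the function, the function analyzes the image, the result is saved) can be sketched as follows. This is a minimal sketch, not the code used later in this post: `ImagePipeline`, `Process`, and the dictionary that stands in for "a storage location of your choosing" are hypothetical, and the analyzer is passed in as a delegate so the flow can be exercised without calling the real service.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical sketch of the blob-trigger flow: read the uploaded image,
// analyze it, and save the result under the blob's name.
public static class ImagePipeline
{
    public static void Process(
        string blobName,
        Stream blob,
        Func<byte[], string> analyze,        // e.g. a call to the Computer Vision API
        IDictionary<string, string> store)   // stand-in for any storage location
    {
        using (var ms = new MemoryStream())
        {
            blob.CopyTo(ms);                           // read the whole image into memory
            string description = analyze(ms.ToArray()); // ask the analyzer what is on the image
            store[blobName + ".json"] = description;     // save the result next to the blob name
        }
    }
}
```

In the real function, `analyze` would be the HTTP call to Cognitive Services and `store` could be another blob container, a table, or a database.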

To implement this, you first need to subscribe to Cognitive Services. Go to https://www.microsoft.com/cognitive-services/ and click “Get Started for Free”.

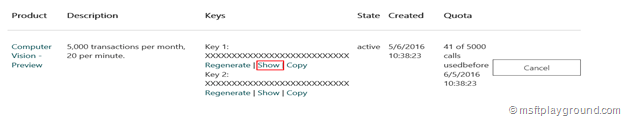

You will be redirected to an information page. Read it, click “Let’s go”, and sign in with your Live ID. You will then be redirected again, this time to a page with your subscriptions. On this page you can retrieve and regenerate the keys that are needed to make requests to the Cognitive Services of your current subscription.

To get a new subscription, click “Request new trials” and select the services you want to try out. In this example we use “Computer Vision – Preview”.

Back on the subscription page, click “Show” to display one of your keys and save it for later, because we need it to implement the HTTP call.
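Rather than pasting the key into the function code, you could keep it in an application setting of the function app; App Service exposes app settings to running code as environment variables. A minimal sketch, assuming a hypothetical setting named `VisionApiKey` and a hypothetical `VisionSettings` class:

```csharp
using System;

// Sketch: read the Cognitive Services key from an app setting instead of
// hard-coding it. "VisionApiKey" is a hypothetical setting name.
public static class VisionSettings
{
    public const string KeySettingName = "VisionApiKey";

    public static string GetSubscriptionKey()
    {
        // App settings of a function app are visible as environment variables.
        string key = Environment.GetEnvironmentVariable(KeySettingName);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                $"App setting '{KeySettingName}' is missing; add your Cognitive Services key there.");
        }
        return key;
    }
}
```

`AnalyzeImage` could then use `VisionSettings.GetSubscriptionKey()` in place of the `{subscription key}` placeholder, and regenerating the key on the subscription page only requires updating the setting.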

Using Cognitive Services basically comes down to sending an HTTP POST to a specific URL, supplying your key and the image in the request. Documentation on how to use the different services can be found on the Cognitive Services site under “Documentation” (https://www.microsoft.com/cognitive-services/en-us/documentation).

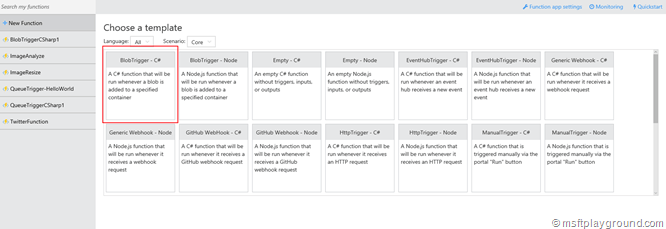

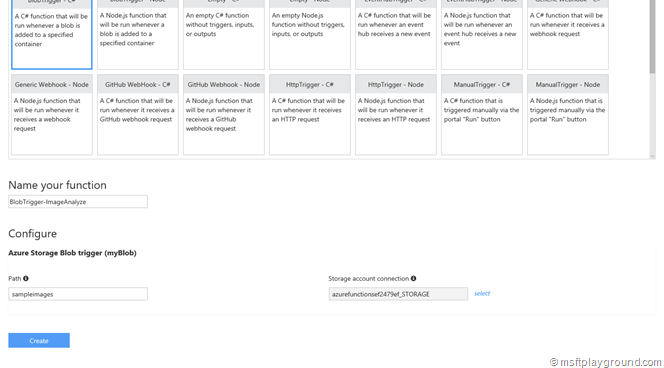
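To make the shape of such a request concrete, the sketch below only builds the message without sending it: a POST to the describe URL used later in this post, the key in the `Ocp-Apim-Subscription-Key` header, and the raw image bytes as an `application/octet-stream` body. `DescribeRequest.Build` is a hypothetical helper, and the api.projectoxford.ai host is the one from the time of writing; check the documentation for the endpoint that belongs to your subscription.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Sketch of the request shape for the Computer Vision "describe" call.
// The message is only built here, not sent, so it can be inspected.
public static class DescribeRequest
{
    public static HttpRequestMessage Build(byte[] image, string subscriptionKey, int maxCandidates)
    {
        var uri = new Uri("https://api.projectoxford.ai/vision/v1.0/describe?maxCandidates=" + maxCandidates);
        var request = new HttpRequestMessage(HttpMethod.Post, uri);

        // the subscription key travels in a request header
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

        // the image itself is the raw request body
        request.Content = new ByteArrayContent(image);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```

Sending it is then a matter of calling `SendAsync(request)` on an `HttpClient` and reading the JSON body of the response.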

With the subscription in place, we can start on the Azure Function. In your Azure Functions container (the function app), create a new function and choose the BlobTrigger template.

Fill in the correct information and click on “Create”:

The Azure Function will be created and you will be redirected to the development tab. On this tab, add the following references; we need them to make the HTTP call and analyze the image.

```csharp
#r "System.Web"

using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Web;
```

The next step is to adjust the default Run method to have a Stream input parameter instead of the string input.

```csharp
public static void Run(Stream myBlob, TraceWriter log)
{
    // get the byte array of the blob stream
    byte[] image = ReadStream(myBlob);

    // analyze the image with the Computer Vision API
    string imageInfo = AnalyzeImage(image);

    // write the result to the function's log window
    log.Info(imageInfo);
}
```

Then we can create the method for analyzing the image.

```csharp
private static string AnalyzeImage(byte[] image)
{
    using (var client = new HttpClient())
    {
        // the subscription key goes in this header; replace the placeholder with your key
        client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", "{subscription key}");

        // maxCandidates: maximum number of candidate descriptions to return
        var queryString = HttpUtility.ParseQueryString(string.Empty);
        queryString["maxCandidates"] = "1";
        var uri = "https://api.projectoxford.ai/vision/v1.0/describe?" + queryString;

        // send the image bytes as the body of the POST
        using (var content = new ByteArrayContent(image))
        {
            content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
            HttpResponseMessage response = client.PostAsync(uri, content).Result;

            // the service answers with a JSON description of the image
            return response.Content.ReadAsStringAsync().Result;
        }
    }
}
```

In this method we send an HTTP POST to the Cognitive Services URL https://api.projectoxford.ai/vision/v1.0/describe. We also add a query string parameter named “maxCandidates”, which tells the service the maximum number of candidate descriptions to return. The default value of this parameter is 1.

The subscription key is placed in the “Ocp-Apim-Subscription-Key” header, and the image we want to analyze is added to the body of the POST as a byte array with the content type application/octet-stream.
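Because `maxCandidates` can be raised above 1, the `captions` array inside `description` (see the example result for the test image further down) may hold several entries, each with a `text` and a `confidence`. A minimal sketch for keeping only the most confident caption, assuming `System.Text.Json` is available (.NET Core 3.0 or later; on the classic Functions runtime you would use Json.NET instead); `CaptionPicker` is a hypothetical name:

```csharp
using System.Text.Json;

// Sketch: pick the caption with the highest confidence from a
// "describe" response. Returns null when there are no captions.
public static class CaptionPicker
{
    public static string BestCaption(string describeResponseJson)
    {
        using (JsonDocument doc = JsonDocument.Parse(describeResponseJson))
        {
            string best = null;
            double bestConfidence = double.MinValue;

            JsonElement captions = doc.RootElement.GetProperty("description").GetProperty("captions");
            foreach (JsonElement caption in captions.EnumerateArray())
            {
                double confidence = caption.GetProperty("confidence").GetDouble();
                if (confidence > bestConfidence)
                {
                    bestConfidence = confidence;
                    best = caption.GetProperty("text").GetString();
                }
            }
            return best;
        }
    }
}
```

For the example response shown later in this post, which contains a single caption, this returns "a dog sitting in the grass with a frisbee".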

With this method in place, the function is almost complete. The only method still missing is ReadStream, which converts the stream into the byte array that we add to the HTTP POST.

```csharp
public static byte[] ReadStream(Stream input)
{
    byte[] buffer = new byte[16 * 1024];
    using (MemoryStream ms = new MemoryStream())
    {
        int read;
        // copy the input stream in 16 KB chunks until it is exhausted
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            ms.Write(buffer, 0, read);
        }
        return ms.ToArray();
    }
}
```
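On .NET 4 and later, `Stream.CopyTo` performs the same buffered copy internally, so the `ReadStream` helper that `Run` calls can be shortened. A sketch with the same signature, wrapped in a hypothetical `StreamHelpers` class so it compiles on its own:

```csharp
using System.IO;

// Alternative sketch of ReadStream: Stream.CopyTo runs the read/write
// loop for us, so no manual buffer is needed.
public static class StreamHelpers
{
    public static byte[] ReadStream(Stream input)
    {
        using (var ms = new MemoryStream())
        {
            input.CopyTo(ms);   // buffered copy until the end of the input
            return ms.ToArray();
        }
    }
}
```

Both versions read from the stream's current position to its end; the manual loop just makes the 16 KB buffer explicit.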

With all these methods in place, the function can be tested by uploading an image to the blob container that the trigger monitors.

In my test scenario I use a sample image from Microsoft.

Analyzing this image will give the following result.

    {
      "description": {
        "tags": [
          "grass",
          "dog",
          "outdoor",
          "frisbee",
          "animal",
          "sitting",
          "small",
          "brown",
          "field",
          "laying",
          "orange",
          "white",
          "yellow",
          "green",
          "mouth",
          "playing",
          "red",
          "holding",
          "park",
          "blue",
          "grassy"
        ],
        "captions": [
          {
            "text": "a dog sitting in the grass with a frisbee",
            "confidence": 0.66584959582698122
          }
        ]
      },
      "requestId": "38e74ca2-b114-4ebb-b74d-457cc9a2adc2",
      "metadata": {
        "width": 300,
        "height": 267,
        "format": "Jpeg"